In a previous post on Babble's development, I mentioned

that the SubstituteAndReturn plugin was slow. Part of this is because the

PPR grammar can find the substitution/transliteration operators

but not provide the information about where in an expression they are

which is needed to determine if the operation is contextual on

the $_ variable.

However before I jump into optimising that, it is good to profile the code to

find where it is being the slowest. I fired up

Devel::NYTProf and found that the code was taking the longest under Perl v5.26 with the CORE:regcomp subroutine

(Perl's internal regexp compilation). Usually, when a regexp does not contain

any interpolated variables this is done at compile time; however, since Babble

constructs regexps at runtime, this CORE:regcomp is called many times. So I

added caching of the compiled regex

in two places where the profiler output indicated the most time was being spent. This immediately led to a

30%-70% reduction in execution time for tests depending on how heavily the code generates grammar

regexps (with the greatest reduction for the postfixderef and

substituteandreturn tests). Processing Dist::Zilla and its dependencies

went from 15 minutes down to 9 minutes, but this result is not using the cache

to its full potential because I currently process each file in separate

interpreters so the cache is per-file instead of over the entire run.

Returning to the SubstituteAndReturn plugin,

the approach used in Babble-0.090008

was looping over the document and inserting a $_ =~ explicit binding

until there were no more places a binding could be inserted.

Upon looking more closely at how the code ran,

I realized that the combination of each_match_within(Expression => ... )

with the pattern that I used could only find one position to place that

explicit binding per iteration; it was doing too much and this was slowing it

down. So I replaced that with each_match_of( Expression => ... and extract the

substitution/transliteration position myself in this PR.

This reduces the processing of Dist::Zilla from 9 minutes to 6 minutes.

I then created a continuous integration setup to make sure that it worked across Perl versions. This is is when I discovered something shocking! The tests were running up to 10 times longer on Perl 5.22–5.36 relative to 5.10–5.18 (40 versus 4 seconds overall). I knew that this slowdown is documented as a PPR limitation but I was not timing just how much slower it was until now. I ran the profiler again and I saw that the slowdown was entirely attributable to regex compilation not matching speed.

This means I should be able to use the caching that I added to make up for the

difference as long as all files are modified in a single process. I rewrote the

bash script that uses GNU Parallel in a single-file-per-process fashion

to a Perl script that uses MCE which uses

fork(2) by default on Unix-likes. While this does introduce IPC overhead, it

turned out to be faster than repeatedly spawning processes. Using fork(2)

opened up another option for optimisation: I could warm up the cache prior to

the fork(2) and use that cache in the child processes — now we're cooking. I

added cases to warm up the cache until the child processes were no longer

reporting cache misses.

When running Babble across many files, it quickly becomes apparent that most

files are unchanged by the plugins so you can gain a speed up by adding a small

check for the syntax being targeted by the plugin. I added these as "early" and

"late" bail outs which can be turned off using an environment variable. These

are meant to be fast checks that look for short matches without using the full PPR grammar.

Early bail outs look over the entire document while late bail outs are used

inside the transformation.

For example, the SubstituteAndReturn early bail out looks for m/ \b (?: s|y|tr ) \b /xs

in the entire document and the PostfixDeref late bail out looks

for m/ \s* -> \s* [\@%\$] /xs inside of matches for the PerlTerm rule.

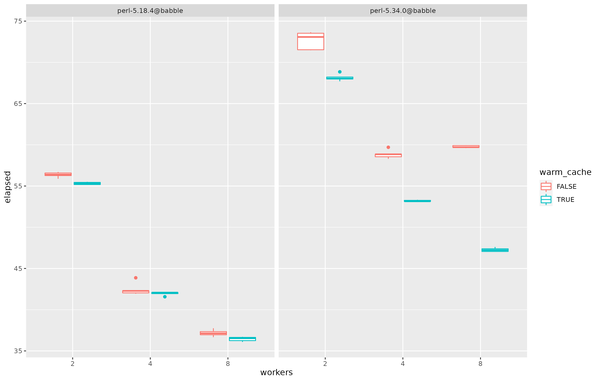

Any purported optimisation needs to be benchmarked. So how do all these optimisations interact? I could run the various options for caching, Perl version, bailing out, etc. multiple times myself and find their mean, but it would be nicer to script that. So I used Permute::Named::Iter to record elapsed time for various options. The speed ups from enabling caching and bailing out are so great and preliminary tests showed significance so what this left me with is testing (Perl version) x (warming cache) x (number of workers). While I know that the older Perl version will run faster serially, I want to compare to see if I did not inadvertently slow it down and if my optimisations bring the elapsed time over newer Perl versions close to that of old Perl versions. I get the following data sorted by mean elapsed time:

> model <- elapsed ~ version * workers * ( cache * warm_cache + bail_out_early + bail_out_late )

> agg <- aggregate(model, mean, data = data )

> agg.sort.elapsed <- agg[order(agg$elapsed),c('version','warm_cache','workers','elapsed')]

> print(agg.sort.elapsed)

version warm_cache workers elapsed

11 perl-5.18.4@babble TRUE 8 36.446

5 perl-5.18.4@babble FALSE 8 37.170

9 perl-5.18.4@babble TRUE 4 41.960

3 perl-5.18.4@babble FALSE 4 42.490

12 perl-5.34.0@babble TRUE 8 47.266

10 perl-5.34.0@babble TRUE 4 53.192

7 perl-5.18.4@babble TRUE 2 55.320

1 perl-5.18.4@babble FALSE 2 56.364

4 perl-5.34.0@babble FALSE 4 58.852

6 perl-5.34.0@babble FALSE 8 59.772

8 perl-5.34.0@babble TRUE 2 68.158

2 perl-5.34.0@babble FALSE 2 72.652

and the following boxplot:

|

Since the data does not fit the assumptions for using ANOVA (elapsed time in the data has non-equal group variances), Aligned Rank Transform (ART) is used (I would have liked to do more runs and to more carefully control the environment for each run, but I didn't like waiting as they have to run serially on the same computer in order to be independent samples!):

> model.reduced <- elapsed ~ version * workers * ( warm_cache )

> art.reduced <- art( model.reduced, data )

> fit.reduced <- anova( art.reduced )

> print(fit.reduced)

Analysis of Variance of Aligned Rank Transformed Data

Table Type: Anova Table (Type III tests)

Model: No Repeated Measures (lm)

Response: art(elapsed)

Df Df.res F value Pr(>F)

1 version 1 48 151.892 < 2.22e-16 ***

2 workers 2 48 202.148 < 2.22e-16 ***

3 warm_cache 1 48 148.590 2.6388e-16 ***

4 version:workers 2 48 56.041 2.7902e-13 ***

5 version:warm_cache 1 48 151.381 < 2.22e-16 ***

6 workers:warm_cache 2 48 101.621 < 2.22e-16 ***

7 version:workers:warm_cache 2 48 123.130 < 2.22e-16 ***

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

In terms of main effects, the number of workers is the greatest predictor of elapsed time. Therefore with the current implementation, warming the cache helps a little bit, but less than adding more workers which makes sense as those workers will each fill the cache themselves as soon as they reach input that can be transformed. Warming the cache might help more when there are many more files processed (currently the code uses a plugin on one distribution at a time instead of all files across all distributions).

All these changes are available as:

Appendix: List of issues and pull requests participated in along the way

Babble

- Cache generated grammar regexps to improve performance by zmughal · Pull Request #1 · zmughal/Babble · GitHub

- SubstituteAndReturn: Faster search for contextual s///r, y///r by zmughal · Pull Request #2 · zmughal/Babble · GitHub

- GitHub Actions CI workflow by zmughal · Pull Request #3 · zmughal/Babble · GitHub

- Add no re 'eval' in order to keep flag within scope by zmughal · Pull Request #4 · zmughal/Babble · GitHub

- SubstituteAndReturn: Use (*MARK:NAME) control verb for lookup by zmughal · Pull Request #5 · zmughal/Babble · GitHub

- SubstituteAndReturn: extract chained regex outside loop by zmughal · Pull Request #6 · zmughal/Babble · GitHub

- Add heuristic optimisations and associated toggle flags by zmughal · Pull Request #7 · zmughal/Babble · GitHub